Multi-Instrument Music Generation

The project used deep learning to generate multi-instrument music compositions with a hybrid neural network that combines LSTM, BiLSTM, and GRU layers. The model was built in Python with Keras and trained on a dataset of 400 MIDI files. The generation pipeline supports variable-length output, multiple instruments, and adjustable tempo. The hybrid model reached 98% accuracy with a loss of 0.0522, showcasing the team's expertise in advanced technologies and its commitment to innovation in music generation.

Project Objective

The Challenge:

The challenge was to use deep learning to compose multi-instrument music. This meant training a hybrid neural network that combines LSTM, BiLSTM, and GRU layers to predict sequences of musical notes, and then using those predictions to produce compositions that span several instruments.

Complexity and Innovation:

The task was as intricate as orchestrating a symphony, and the client needed results quickly. The project aimed to push the boundaries of music generation technology and meet the client's expectations for innovative multi-instrument music generation.

The Process

Client Collaboration:

The project began with in-depth face-to-face meetings with the client to understand their musical aspirations and objectives.

Technology Stack:

We chose Python and the Keras library for their flexibility and extensive support for deep learning tasks. Together they allowed us to build a versatile and efficient neural network model for music generation.

Dataset:

The model was trained on a dataset of MIDI files. MIDI (Musical Instrument Digital Interface) is a standardised electronic format for representing music: a MIDI file records which notes are played, when they start and stop, and which instruments play them. The project used a subset of 400 MIDI files from this dataset for model training and testing.

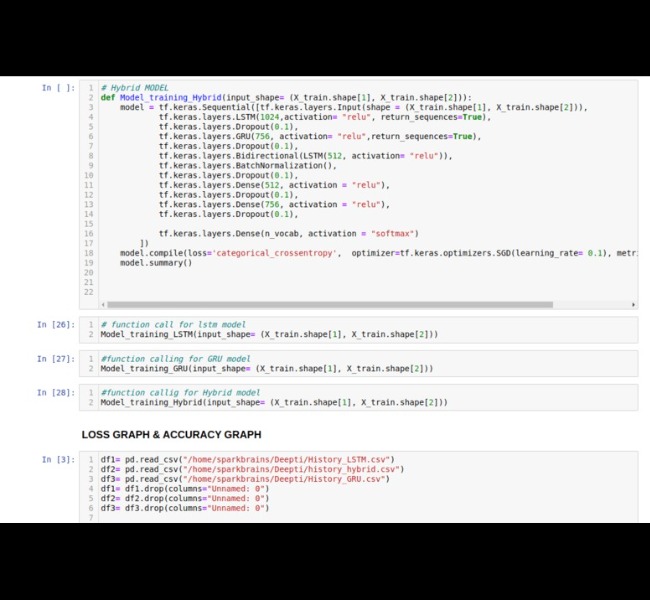
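One common way to turn MIDI files into training data for next-note prediction is to flatten each file into a stream of note or chord tokens, map each distinct token to an integer, and slide a fixed-length window over the stream so that each window's target is the token that follows it. The sketch below assumes this scheme; the token names, the window length, and the helpers `build_vocabulary` and `make_training_windows` are hypothetical, and the MIDI parsing step itself is omitted.

```python
from typing import Dict, List, Sequence, Tuple


def build_vocabulary(notes: Sequence[str]) -> Dict[str, int]:
    """Map each distinct note/chord token to an integer (sorted for reproducibility)."""
    return {token: idx for idx, token in enumerate(sorted(set(notes)))}


def make_training_windows(notes: Sequence[str], vocab: Dict[str, int],
                          seq_len: int) -> Tuple[List[List[int]], List[int]]:
    """Slide a seq_len window over the encoded stream; the next token is the target."""
    encoded = [vocab[n] for n in notes]
    inputs: List[List[int]] = []
    targets: List[int] = []
    for start in range(len(encoded) - seq_len):
        inputs.append(encoded[start:start + seq_len])
        targets.append(encoded[start + seq_len])
    return inputs, targets


# Hypothetical token stream; in practice it would be extracted from the MIDI files.
corpus = ["C4", "E4", "G4", "C4", "E4", "G4", "C5"]
vocab = build_vocabulary(corpus)
X, y = make_training_windows(corpus, vocab, seq_len=3)
print(vocab)  # {'C4': 0, 'C5': 1, 'E4': 2, 'G4': 3}
print(X, y)   # [[0, 2, 3], [2, 3, 0], [3, 0, 2], [0, 2, 3]] [0, 2, 3, 1]
```

Under this scheme the vocabulary size determines the width of the output layer, so a 230-token vocabulary would correspond to the 230-neuron output layer described below.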

Model Architecture:

The neural network model is the core of the project. Its architecture is designed to capture the intricacies of multi-instrument music:

Input Layer: The model begins with an input layer that receives sequences of musical notes. Each input sequence encodes a series of consecutive notes.

LSTM Layer: The first hidden layer is an LSTM (long short-term memory) layer, a type of recurrent neural network (RNN) designed to capture long-term dependencies in sequential data. Here it learns recurring musical patterns and phrases.

Dropout Layer: To reduce overfitting, a dropout layer with a rate of 0.1 follows. During training, dropout randomly sets 10% of its input units to zero at each step, which improves the model's ability to generalise.

GRU Layer: Next comes a GRU (gated recurrent unit) layer, another recurrent layer that learns dependencies between time steps, using fewer parameters than an LSTM of the same size.

BiLSTM Layer: This Bidirectional LSTM layer processes each sequence in both the forward and backward directions, giving the model context from both earlier and later notes in the input.

Batch Normalisation Layer: Batch normalisation normalises the activations passed to the following layers, which stabilises and speeds up training.

Dense Layers: Multiple dense layers with ReLU activation functions are included to deepen the network and extract high-level features from the input data.

Output Layer: The final layer has 230 neurons, one for each unique musical note the model can predict.
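The layer stack above can be sketched in Keras roughly as follows. The layer order, the 0.1 dropout rate, the ReLU dense layers, and the 230-way output follow the description; the unit counts, the input window length `SEQ_LEN`, the single-feature input encoding, the softmax activation, and the optimizer and loss are assumptions, and `build_hybrid_model` is a hypothetical name rather than the project's actual code.

```python
import tensorflow as tf
from tensorflow.keras import layers

SEQ_LEN = 100     # assumed length of each input window (not given in the source)
N_CLASSES = 230   # one output neuron per unique note, as described above


def build_hybrid_model(seq_len: int = SEQ_LEN, n_classes: int = N_CLASSES) -> tf.keras.Model:
    """Hypothetical LSTM -> GRU -> BiLSTM stack; unit counts are illustrative assumptions."""
    model = tf.keras.Sequential([
        # Each time step carries one encoded note (assumed single-feature encoding).
        layers.Input(shape=(seq_len, 1)),
        # return_sequences=True passes every time step on to the next recurrent layer.
        layers.LSTM(256, return_sequences=True),
        layers.Dropout(0.1),
        layers.GRU(256, return_sequences=True),
        # Reads the window forwards and backwards; returns only the final combined state.
        layers.Bidirectional(layers.LSTM(128)),
        layers.BatchNormalization(),
        layers.Dense(256, activation="relu"),
        layers.Dense(128, activation="relu"),
        # Softmax turns the 230 outputs into a probability distribution over notes.
        layers.Dense(n_classes, activation="softmax"),
    ])
    # Sparse loss expects integer note indices as targets (assumed choice).
    model.compile(optimizer="adam",
                  loss="sparse_categorical_crossentropy",
                  metrics=["accuracy"])
    return model


model = build_hybrid_model()
model.summary()
```

Stacking recurrent layers requires every recurrent layer except the last to return its full output sequence, which is why `return_sequences=True` is set on the LSTM and GRU. Integer note windows would be reshaped to `(samples, SEQ_LEN, 1)` (and usually scaled) before calling `model.fit`; an `Embedding` layer is a common alternative encoding.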

Generating Music:

Once trained, the model can generate new compositions. Users specify inputs such as the length of the piece and their instrument preferences, and the model then generates a sequence of musical notes accordingly. Key features include:

Variable-Length Output: Users can specify how many notes the output should contain, so the length of the piece is flexible.

Multiple Instruments: Users can include several instruments in a composition to create a diverse ensemble, choosing from a predefined list or tailoring the selection to their preferences.

Dynamic Tempo Control: Users can set the tempo (speed) of the generated music from a range of values to evoke different moods.
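These three features can be illustrated with a minimal, framework-independent sketch. It assumes greedy next-note prediction over a sliding window, round-robin assignment of notes to instruments, and a fixed note length in beats; none of these choices is specified above. `NoteEvent`, `generate_tokens`, `schedule`, and `toy_predictor` are hypothetical names. In a real pipeline, `predict_next` would wrap the trained model's prediction for the current window, and tokens would be mapped back to pitches through the vocabulary. Tempo is converted to time using seconds per beat = 60 / BPM.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence


@dataclass
class NoteEvent:
    instrument: str    # instrument that plays this note
    token: int         # note index predicted by the model
    start_s: float     # onset time in seconds
    duration_s: float  # length in seconds


def generate_tokens(predict_next: Callable[[List[int]], Sequence[float]],
                    seed: List[int], n_notes: int) -> List[int]:
    """Generate n_notes tokens autoregressively from a seed window (greedy decoding)."""
    window = list(seed)
    generated: List[int] = []
    for _ in range(n_notes):
        probs = predict_next(window)
        # Greedy choice: index of the most probable next note.
        next_token = max(range(len(probs)), key=lambda i: probs[i])
        generated.append(next_token)
        # Slide the window: drop the oldest note, append the new one.
        window = window[1:] + [next_token]
    return generated


def schedule(tokens: List[int], instruments: List[str], tempo_bpm: float,
             beats_per_note: float = 0.5) -> List[NoteEvent]:
    """Assign instruments round-robin and convert beat positions to seconds at the given tempo."""
    seconds_per_beat = 60.0 / tempo_bpm
    step = beats_per_note * seconds_per_beat
    return [NoteEvent(instruments[i % len(instruments)], tok, i * step, step)
            for i, tok in enumerate(tokens)]


def toy_predictor(window: List[int]) -> List[float]:
    """Stand-in for the trained model: always favours (last note + 1) mod 4."""
    probs = [0.0] * 4
    probs[(window[-1] + 1) % 4] = 1.0
    return probs


tokens = generate_tokens(toy_predictor, seed=[0, 1, 2], n_notes=5)  # [3, 0, 1, 2, 3]
events = schedule(tokens, ["Piano", "Violin"], tempo_bpm=120)
for e in events:
    print(e)
```

Greedy decoding is the simplest option; sampling from the predicted distribution (optionally with a temperature) tends to produce less repetitive music. Writing the resulting events to an actual MIDI file would typically be done with a library such as music21 or pretty_midi.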

The Results

The results demonstrate a successful application of advanced deep learning techniques to multi-instrument music generation. Our hybrid model achieved 98% accuracy and a loss of 0.0522, reflecting our expertise in Keras, TensorFlow, and neural networks.
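Assuming the reported loss is the categorical cross-entropy usually paired with a softmax output over note classes (the loss function is not named above), the figure can be interpreted as follows, where $N$ is the number of predicted positions, $x_i$ an input window, $y_i$ the correct next note, and $p_\theta$ the model's predicted probability:

```latex
\mathcal{L} = -\frac{1}{N}\sum_{i=1}^{N} \log p_\theta(y_i \mid x_i) = 0.0522
\quad\Longrightarrow\quad
\Big(\prod_{i=1}^{N} p_\theta(y_i \mid x_i)\Big)^{1/N} = e^{-0.0522} \approx 0.949
```

Under that assumption, the model assigns the correct next note a geometric-mean probability of about 95%. Accuracy, by contrast, counts the fraction of positions where the highest-probability note is the correct one.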

The knowledge and techniques gained during this project have significantly strengthened our skill set, and we are well equipped to apply them to future projects with similar objectives. Our commitment to excellence and pursuit of innovation were evident throughout, reaffirming our dedication to delivering exceptional results.

Visual Designs

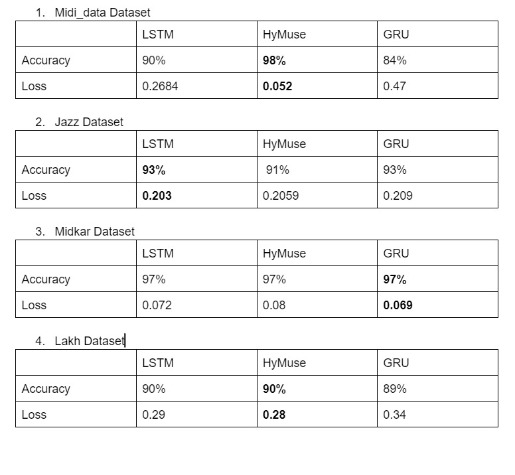

Comparison of Model Loss and Accuracy Across Different Datasets

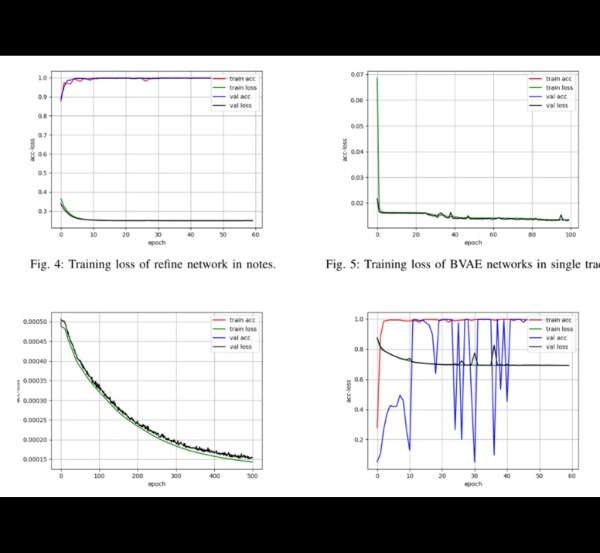

Model Training Results

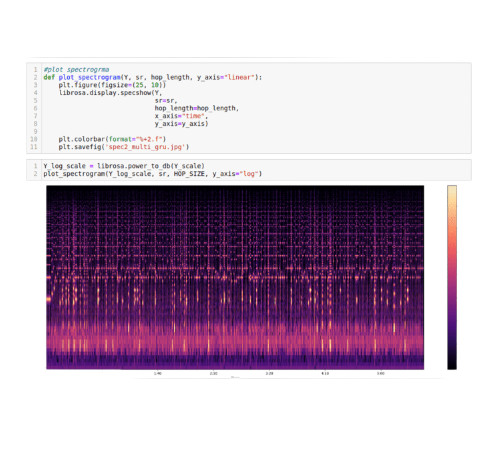

Spectrogram Generated from the MIDI Music Output

Primary Model Training Code

TECHNOLOGIES USED

Python

Matplotlib

TensorFlow